Helps big organizations detect, analyze, and fix advanced threats.

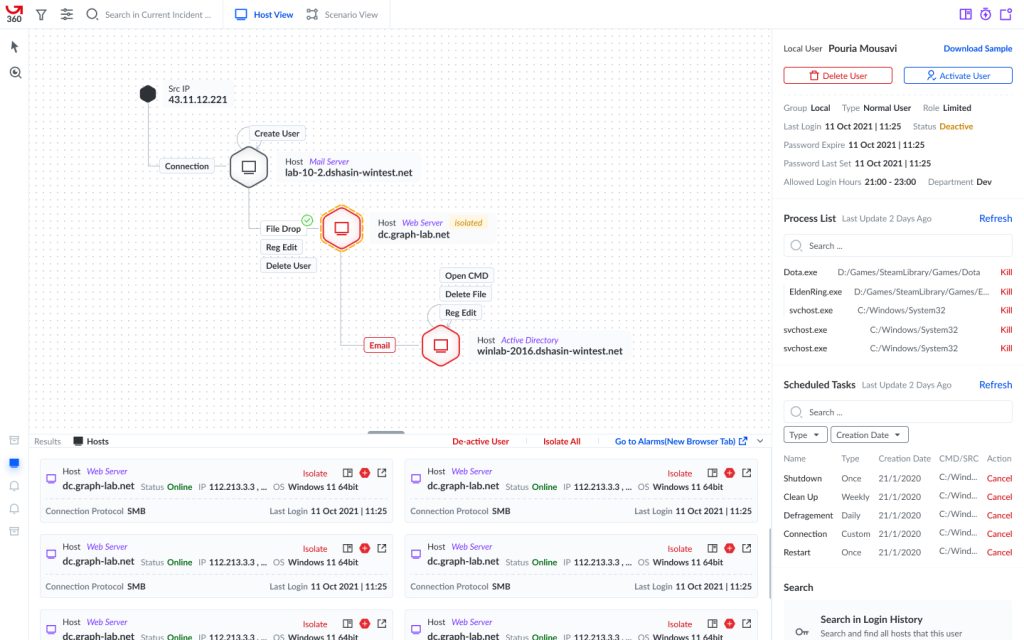

ATD360 is the ultimate weapon for big organizations to detect advanced threats! With its sleek log system, graph visual mode, and threat hunting mode, it makes spotting security threats a breeze. The best part? It provides profiles for all files, connections, registry key values, users, tasks, software, and more! ATD360 has got your back and is the perfect partner to keep your organization secure.

I’ve been working at Graph for 3 years!

My Role

Year 1: ATD Interaction Designer

Year 2 & 3: ATD360 Product Designer

Overall Impact

Total redesign of ATD and creation of ATD360

Combine 4 products into one unique platform

Design and manage an in-house design system named “Graphix”

My Impact

Total redesign of ATD: Research, Data Analysis, Interaction Design, User Flows, Tests

Create a totally new platform and a new workflow: ATD360 was the combination of 4 related but separate products

The Problem

The system generated thousands of security alarms and events. It was impossible for the users to examine all of them, so they sought an automatic pattern detection system.

💡Why ATD360?

The new design, called ATD360, was a complete overhaul of the previous ATD. Our team redefined the workflow for our users, who had been analyzing thousands of alarms by hand to identify patterns and potential attack flows. We automated many of those steps to streamline the process. Additionally, our users worked with four different products, each with its own panel and a lot of information that the others needed. Despite this separation, we found ways to connect the products and improve communication between them.

MY ROLE

Product Designer

When it comes to ATD360 I

💡Why ATD360?

ATD360 was a total redesign of the old ATD. We redefined our persona’s workflow: they were working through thousands of alarms to find a pattern and an attack flow, while most steps of this journey could be automated.